In the world of artificial intelligence (AI) and chatbots, misconceptions abound. Yet, you cannot afford to let them hold you back. Alberto Chierici, Head of Product and co-founder, is on a mission to bust some myths.

In the last few years, AI and chatbots have become buzzwords synonymous with digital transformation. The press often focuses on the pilots and prototypes, framing them as overnight wonders. Yet many of these projects have only been made possible by years of work. The resulting prototype is just the tip of the iceberg.

Here are five of the biggest misconceptions about this technology in the industry.

1. All bots use AI

Right now, there are three categories of chatbot on the market.

- Script-driven bots. These map bot and user interactions into a decision-tree structure, with the aim of moving your customer from A to B. Conversational experience design is key here, and will vary according to your use-case. For the insurance industry, we at Spixii specialise in designing compliant conversational experiences.

- Generative-text bots. These use statistical models to mirror human conversation and speech, generating text and dialogue patterns. They tend to be extremely complex and are rarely compliant.

- The in-betweens. These blend the two approaches: a little natural language processing recognises categories or entities in users' text messages, then funnels the user to the relevant dialogue flow. We have developed some of these, using statistical models to select compliant scripts and optimise user paths for customer segmentation or use-case-specific metrics.
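To make the categories more concrete, here is a minimal sketch of an "in-between" bot: a keyword lookup stands in for the natural language processing step, and a small decision tree plays the part of the scripted dialogue flow. The flow names, prompts and keyword lists are all hypothetical and chosen only for illustration; a production system would use a trained classifier and properly designed, compliant scripts.

```python
# Hypothetical scripted flows: each node holds a prompt and the next node per answer.
FLOWS = {
    "claim": {
        "start": {
            "prompt": "Is the claim for a car or a home?",
            "next": {"car": "car_date", "home": "home_date"},
        },
        "car_date": {"prompt": "When did the car incident happen?", "next": {}},
        "home_date": {"prompt": "When did the home incident happen?", "next": {}},
    },
    "quote": {
        "start": {"prompt": "Which product would you like a quote for?", "next": {}},
    },
}

# Hypothetical keyword lists standing in for a trained NLP classifier.
KEYWORDS = {
    "claim": {"claim", "accident", "damage", "stolen"},
    "quote": {"quote", "price", "cost", "premium"},
}


def route(message):
    """Pick the flow whose keywords overlap most with the message, or None."""
    words = set(message.lower().split())
    scores = {flow: len(words & kws) for flow, kws in KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def step(flow, node, answer):
    """Follow the scripted tree; stay on the same node if the answer is not recognised."""
    return FLOWS[flow][node]["next"].get(answer.lower().strip(), node)
```

A message such as "I had an accident yesterday" would be routed to the hypothetical `claim` flow, after which every turn follows the script. Only the routing step is statistical in spirit; the wording the customer sees stays fixed.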

2. Chatbots are easy and quick to develop

Yes - you can develop bots quickly. But in our experience, they fail fast.

It is all too easy to create a 'bad' bot. For instance, within an hour you can upload commonly asked questions and answers to one of the many commercial bot frameworks, such as Microsoft's, and create an FAQ chatbot. While this proves a concept and functions as a nice tool, it is a nice-to-have; it often adds little to no value to your bottom line - at least in the insurance context.

Chief Analytics and Data Officer of Morgan Stanley, Jeff McMillan, discusses this here. To make your chatbot implementation successful, it is key to align it with your wider operational strategy, objectives and vision - particularly if you are looking to transform a mammoth firm such as an insurance company.

Spixii CXD (Conversation Experience Designer) in action

3. Deep learning is a panacea

Some things in life fix everything: Mum's chicken soup being one of them. Unfortunately, deep learning is not one of those things.

Deep learning is simply one of the many methodologies available within the wider family of machine learning algorithms. In production systems, however, the simpler, traditional algorithms can sometimes achieve your business goals. For instance, logistic regression and/or TF-IDF (Term Frequency - Inverse Document Frequency) work for many tasks.
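As a rough illustration of how far a traditional technique can go, the sketch below weights words with TF-IDF and labels a new message by its most similar training example using cosine similarity. This is a nearest-neighbour match rather than logistic regression, the example sentences and labels are invented, and the smoothed IDF formula is one common variant among several.

```python
import math
from collections import Counter

# Hypothetical labelled examples; a real system would need far more data.
EXAMPLES = [
    ("i want to make a claim for my car", "claim"),
    ("my car was damaged in an accident", "claim"),
    ("how much does home insurance cost", "quote"),
    ("give me a quote for travel insurance", "quote"),
]


def tokenize(text):
    return text.lower().split()


def build_idf(docs):
    """Smoothed inverse document frequency for every term in the corpus."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}


def tfidf(tokens, idf):
    """Term frequency times IDF; terms unseen in the corpus are ignored."""
    tf = Counter(t for t in tokens if t in idf)
    return {t: c * idf[t] for t, c in tf.items()}


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


DOCS = [tokenize(text) for text, _ in EXAMPLES]
IDF = build_idf(DOCS)
VECTORS = [tfidf(doc, IDF) for doc in DOCS]


def classify(message):
    """Return the label of the most similar training example."""
    query = tfidf(tokenize(message), IDF)
    scores = [cosine(query, vec) for vec in VECTORS]
    return EXAMPLES[scores.index(max(scores))][1]
```

Calling `classify("my car had an accident")` returns the `claim` label here. Note that a message sharing no words with the examples falls back to the first example's label, so a real system would also need a confidence threshold.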

4. AI algorithms can understand and interpret all the messy data

If you are using a legacy system and facing vast amounts of disorganised data, AI is not a magic wand. The most important thing is to collect the right data. This way, you can also avoid historical biases.

Not all data is suitable for the problem at hand. For instance, if you are training an NLP model for the insurance context, you can access libraries filled with standard predicted answers. But for your specific insurance product or customer, your answers may be far from 'standard'. It is key to build a strong data acquisition strategy with a data pipeline and compliant cleaning process.

A common problem in AI development is context. It is essential to have an expert understanding of the context and of the solution's impact within it, together with the wider strategic and operational business objectives.

Explore the ethical implications of this further here.

5. AI programmes itself

AI boils down to a set of machine learning and automation algorithms, which are not necessarily statistically driven.

In very simplistic terms, algorithms work this way:

- Establish a suitable equation (or a set of equations) to best predict a future outcome

- Provide past data to calibrate the equation

- Instruct the machine to update the equation's parameters as new data comes in (this is how it 'learns')
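The three steps above can be sketched in a few lines. The equation here is a straight line, `y = w * x + b`, chosen purely for illustration; the data is made up, and the update rule is a single gradient step on the squared error with an arbitrary learning rate. Every one of those choices is made by a person, not by the machine.

```python
# Step 1: establish an equation. Here: y = w * x + b (an illustrative assumption).
def predict(params, x):
    w, b = params
    return w * x + b


# Step 2: calibrate the equation on past data with ordinary least squares.
def calibrate(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = cov_xy / var_x
    return (w, mean_y - w * mean_x)


# Step 3: update the parameters when a new observation arrives
# (one gradient step on half the squared prediction error).
def update(params, x, y, learning_rate=0.01):
    w, b = params
    error = predict(params, x) - y
    return (w - learning_rate * error * x, b - learning_rate * error)


past_x = [1.0, 2.0, 3.0, 4.0]
past_y = [3.0, 5.0, 7.0, 9.0]  # made-up data that follows y = 2x + 1
params = calibrate(past_x, past_y)
params = update(params, 5.0, 12.0)  # a new point slightly above the old trend
```

The machine changes the values of `w` and `b`, but the form of the equation, the error it minimises and the learning rate were all fixed in advance by the programmer.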

No machine can work out by itself how to write code - or at least not without being programmed by a human first.

No machine writes equations out of nothing. Again, not without a human telling it.

No machine can decide or think how to automatically update those equations' parameters. This is done by aliens. Wait. Humans.

Ultimately, the 'how' always comes from a human. The 'what', such as an equation or parameter, can change thanks to the machine, but always because that machine is optimising metrics designed and chosen by people.

AI takes a great deal of work, and it is our responsibility as humans to design it.

So what is AI?

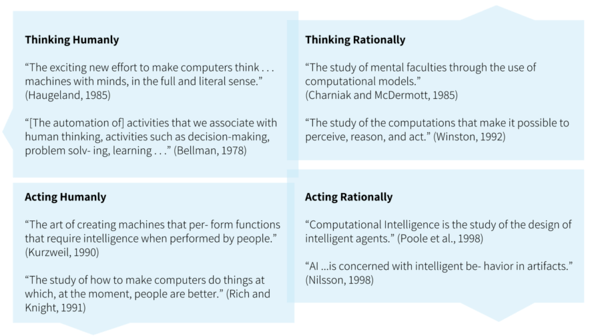

Ultimately, we can define AI as an intelligent agent capable of rationally achieving a given goal. The quadrant below illustrates the four schools of thought. Here at Spixii, we fall into the 'Acting Rationally' camp.

Does it take the glamour away from chatbots and AI?

Maybe.

But does it mean they are any less powerful?

Not at all.

The four schools of thought (http://aima.cs.berkeley.edu/)